The chip war is here — and Google holds the high ground

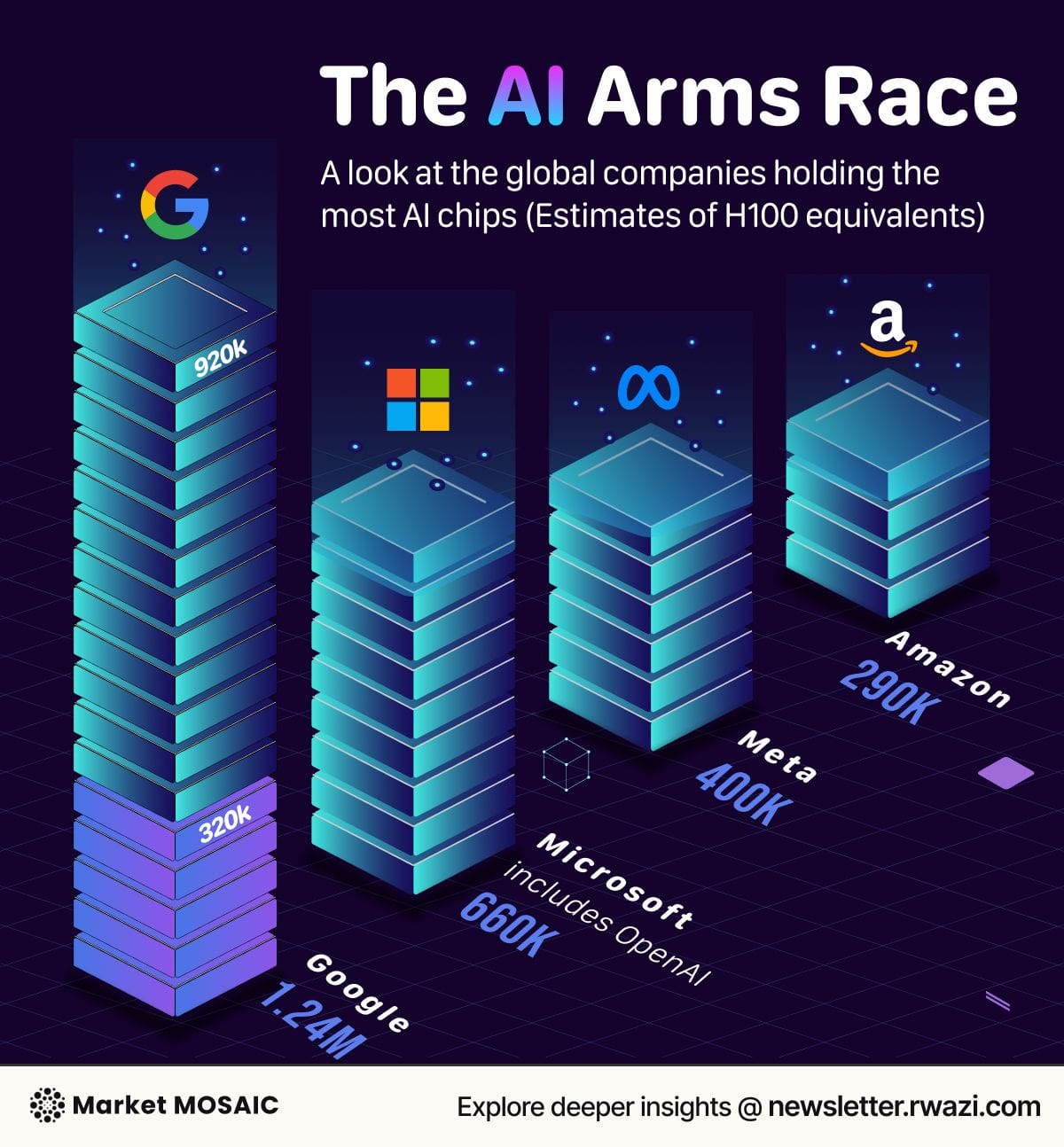

Google leads the AI chip war with 1.24M H100-equivalent chips, far outpacing rivals. In today’s AI race, compute power is the ultimate weapon, shaping innovation, market dominance, and the future of digital competition.

What are AI chips and why they matter

In the artificial intelligence race, chips are more than just hardware; they are the beating heart of innovation. These advanced semiconductors, such as Nvidia’s H100 GPUs and Google’s custom Tensor Processing Units (TPUs), are designed to handle the immense parallel computations needed to train and run the most sophisticated AI models. Unlike traditional processors, they can process vast volumes of data simultaneously, enabling faster training times, greater model complexity, and real-time responsiveness. In practical terms, these chips are the engines of AI progress, and in today’s technology landscape, access to them is as strategically important as oil was in the industrial age.

Google’s dominance in the AI compute race

Recent analysis of AI infrastructure capacity shows a clear and widening gap between Google and its competitors. Google commands an estimated 1.24 million H100-equivalent chips, a figure that includes some 320,000 of its own custom TPUs. This level of compute power places the company far ahead of Microsoft and OpenAI’s combined 660,000 chips, Meta’s 400,000, and Amazon’s 290,000.
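To put those figures side by side, here is a minimal Python sketch that computes how many times larger Google's estimated fleet is than each rival's. The chip counts are the estimates quoted above; the names `fleet` and `lead_ratios` are illustrative only and do not come from the underlying analysis.

```python
# Estimated H100-equivalent chip counts, as quoted in this article.
# These are estimates, not audited figures.
fleet = {
    "Google": 1_240_000,
    "Microsoft + OpenAI": 660_000,
    "Meta": 400_000,
    "Amazon": 290_000,
}


def lead_ratios(counts, leader):
    """Return how many times larger the leader's fleet is than each rival's."""
    base = counts[leader]
    return {name: base / n for name, n in counts.items() if name != leader}


ratios = lead_ratios(fleet, "Google")

# Sum of the three rival groups named in the article.
rivals_total = sum(n for name, n in fleet.items() if name != "Google")

for name, ratio in ratios.items():
    print(f"Google vs {name}: {ratio:.2f}x")
print(f"Named rivals combined: {rivals_total:,} chips")
```

On these estimates, Google's fleet is roughly 1.9 times that of Microsoft and OpenAI combined, 3.1 times Meta's, and about 4.3 times Amazon's. The three rival groups together total about 1.35 million chips, slightly more than Google's estimated 1.24 million, so the lead is over each competitor individually rather than over the field as a whole.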

The advantage is not merely theoretical. With such capacity, Google can train larger and more sophisticated models at speeds that others struggle to match, integrating them seamlessly into its ecosystem. From enhanced search results to AI-powered email assistance, predictive text, and advanced video recommendations, Google’s infrastructure enables it to deliver consumer experiences that quickly become industry benchmarks.

A company’s ability to innovate in AI is directly tied to how quickly it can train new models and how effectively it can deploy them at scale.

When a model that might take weeks to train on limited infrastructure can be completed in days with sufficient compute, the advantage compounds rapidly. Faster development cycles mean more sophisticated features, and more sophisticated features, in turn, reinforce market leadership. The cost efficiencies gained through scale also make it easier for leaders to roll out cutting-edge AI services to millions or even billions of users at once.

The growth of AI chip infrastructure

The rise of transformer-based AI models in 2017 marked the beginning of an unprecedented surge in demand for high-performance chips. Nvidia’s A100, followed by the H100, unlocked the ability to train models with trillions of parameters — the scale needed for systems like GPT-4 or Google’s Gemini Ultra. In just five years, the global AI chip market has expanded from under $20 billion to more than $70 billion, with hyperscale data centers driving much of that growth.

Every leap in chip performance has had an immediate impact on the AI industry. Tasks that were once considered research experiments, such as real-time translation, advanced image generation, and multimodal reasoning, are now practical and commercially viable. As chip technology improves, the scope of AI applications expands, touching sectors as diverse as healthcare, finance, logistics, and creative industries.

Ripple effects across the industry and beyond

The chip war is creating a new kind of digital divide. Companies with deep infrastructure resources are setting the pace of AI development, while smaller players often find themselves dependent on cloud partnerships with these same giants. The high cost of cutting-edge AI chips, which can exceed $30,000 each, raises the barrier to entry, concentrating power among a handful of technology leaders.

The implications stretch beyond corporate competition. Governments see AI chips as strategic assets, sparking geopolitical tensions and export controls aimed at securing domestic supply chains. Meanwhile, consumers, conditioned by leaders like Google to expect ever more advanced AI capabilities, are raising the bar for the entire market. This combination of competitive, political, and consumer pressures ensures that the chip race will remain a defining feature of the technology sector for years to come.

The strategic imperative for business leaders

For CEOs, founders, and investors, the lesson is straightforward but urgent. AI infrastructure is no longer optional; it is foundational to long-term competitiveness. Companies without the resources to build massive chip reserves will need to secure access through partnerships, acquisitions, or strategic alliances. Those that fail to act risk not only lagging behind in innovation but also losing relevance in markets where AI capabilities are rapidly becoming a baseline expectation.

In the end, the race for AI dominance is not just about who can write the smartest algorithms. It is about who can build, control, and scale the most powerful engines to run them. And in that race, Google currently holds the high ground — with no signs of slowing down.