Why data privacy is the next big disruption in AI

In the AI race, trust is emerging as the real competitive edge. The future won’t belong to those who collect the most data, but to those who use it wisely, transparently, and with respect for privacy.

In today’s AI-powered world, the most valuable resource isn’t just innovation; it’s trust. And increasingly, that trust hinges on how companies handle data privacy.

Artificial intelligence has become the backbone of modern digital services. It powers everything from customer service bots and hiring platforms to healthcare diagnostics and financial forecasting. But the same algorithms that promise smarter decisions also demand vast amounts of personal data. As AI scales, so does its appetite for information, much of which is collected, shared, and analyzed without full user awareness.

This growing data dependency is sparking a reckoning. Consumers are becoming more discerning, more vocal, and more protective of their digital footprint. A recent sentiment analysis revealed that 73% of users now consider data protection a deciding factor when choosing AI tools. Even more striking, 41% are ready to abandon a platform entirely if they discover it collects too much data. These numbers signal a major shift in user priorities. People are no longer just impressed by what AI can do; they’re increasingly concerned about what it demands in return, especially when it comes to their personal data. The question now is whether the value is worth the privacy cost.

The Hidden Costs of Intelligence

What makes AI so effective also makes it inherently intrusive. Unlike traditional software, AI systems constantly learn and adapt, which means they rely on continuous data flows, often in ways that blur the lines of consent.

One of the most pressing concerns lies in unauthorized data usage. Many AI platforms harvest personal details without clearly informing users what’s being collected, how it’s processed, or where it might end up. From browser behavior and location tracking to voice recordings and biometric scans, the spectrum of data being scooped up is vast. Yet, the mechanisms for user consent are often buried in complex legal jargon, if they exist at all.

This covert data collection can have serious consequences. Individuals may be unknowingly subjected to targeted profiling, surveillance, or even identity theft. And when biometric data such as facial scans or fingerprints is involved, the risks are amplified. Unlike passwords, biometrics can’t be changed. A leak of such data doesn’t just compromise an account; it compromises a person’s identity permanently.

A Case Study in Contrast

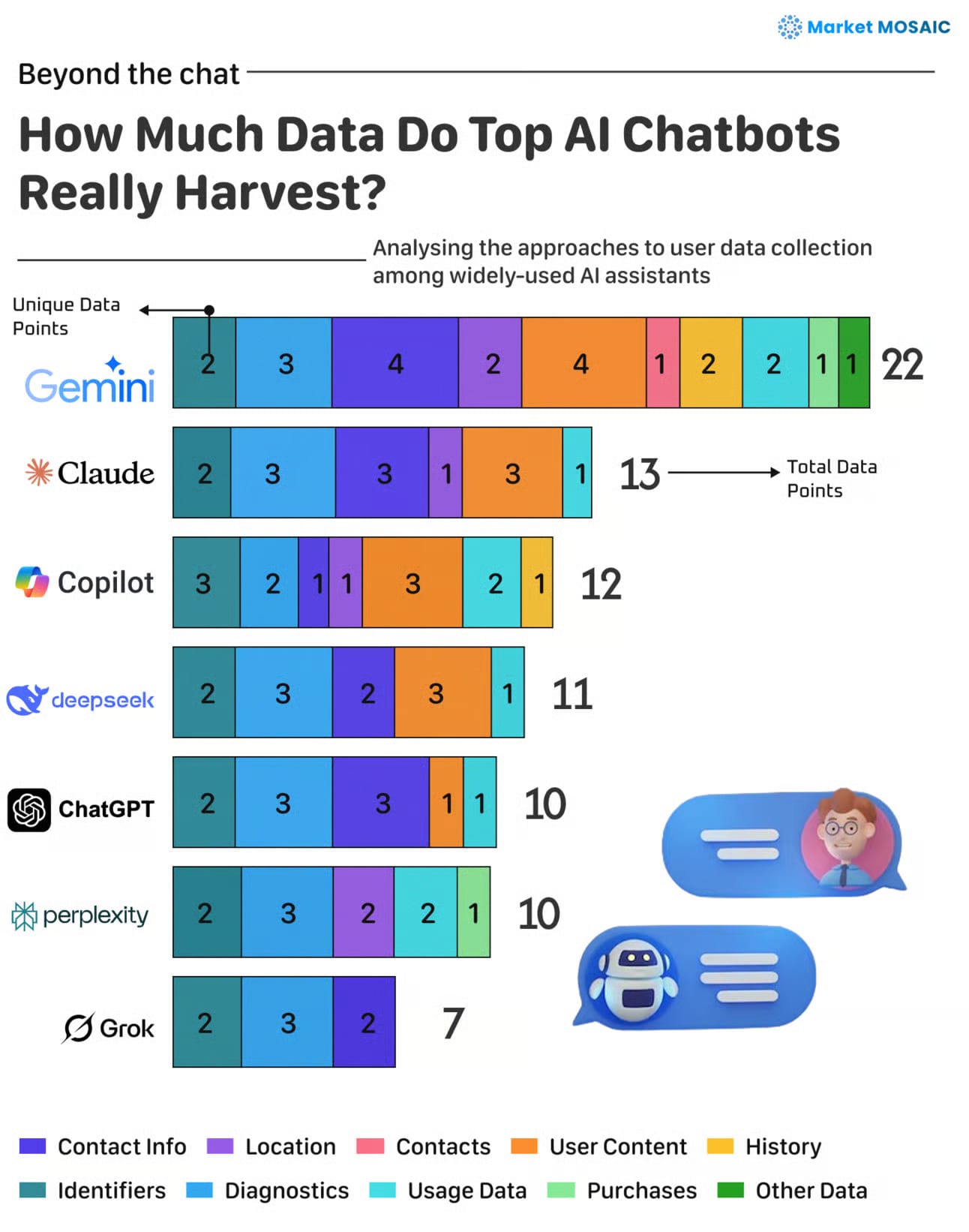

The differences in how AI platforms handle privacy are especially clear when you compare how much data various chatbots collect from users. Google’s Gemini, for instance, collects 22 different categories of user data. In contrast, Grok, the chatbot from Elon Musk’s xAI, gathers just 7. This gap is not just technical; it’s strategic.

While Gemini’s approach enables richer personalization, it also raises serious concerns about collecting more personal data than necessary, which many users see as an invasion of privacy. Platforms like Grok and Claude are gaining traction by positioning themselves as privacy-conscious alternatives, appealing to users who value restraint over raw capability. Their core message is simple: you get intelligent AI tools without sacrificing your privacy, with less data collected and less intrusion into your personal life.

These two approaches highlight a growing divide in the AI industry. On one side are platforms that treat data as fuel for performance. On the other are those that treat privacy as a product feature in itself. And in an environment where trust is becoming a competitive advantage, the latter group is gaining momentum.

Insight for Business Leaders

For business leaders, the implications are clear. Data privacy is no longer a back-office compliance issue; it’s a front-line business strategy.

Companies that embed ethical data practices into their core design will not only avoid regulatory penalties but also win deeper loyalty from increasingly informed users. Leading this shift means building AI with transparency, consent, and fairness at the forefront, rather than trying to bolt these values on after the product is already live. This is especially crucial as governments tighten regulations. The EU’s Artificial Intelligence Act, for example, imposes transparency obligations on high-risk AI systems, while the Nigeria Data Protection Act (NDPA) is setting a precedent for how African markets handle data sovereignty. Organizations that align early with these frameworks will have a smoother path to global scalability and fewer surprises down the road.

Moreover, the financial risks of neglecting privacy are growing. High-profile data breaches, like the AI-driven healthcare breach in 2021 that exposed millions of patient records, have already resulted in heavy fines, public outrage, and plummeting user confidence. These are not isolated incidents; they’re warning signs of what can go wrong when data privacy is ignored.

As AI becomes part of everyday life, privacy is no longer optional; it’s the new standard.